Websites also tend to monitor the origin of traffic, so if you want to scrape a website in Brazil, try not to do it with proxies in Vietnam. But from experience, I can tell you that request rate is the most important factor in “Request Pattern Recognition”, so the slower you scrape, the less chance you have of being discovered. Regardless of how site owners see it, the practice of web scraping has come to stay, and unless you cross certain lines, web scraping is legal. However, because sites are fighting it, you need to go the extra mile to successfully extract the data you are interested in.
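To make the point about request rate concrete, here is a minimal sketch of pacing requests with a randomized delay. The function names, the 2-second base delay, and the 50% jitter are illustrative assumptions, not values taken from any particular site or tool; `fetch` stands in for whatever HTTP call you actually use.

```python
import random
import time
from typing import Callable, Iterable, List, Optional


def jittered_delays(count: int, base_seconds: float, jitter: float,
                    rng: random.Random) -> List[float]:
    """Return `count` delays, each within +/- `jitter` (a fraction) of the base."""
    return [base_seconds * (1 + rng.uniform(-jitter, jitter)) for _ in range(count)]


def fetch_slowly(urls: Iterable[str],
                 fetch: Callable[[str], str],
                 base_seconds: float = 2.0,
                 jitter: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep,
                 rng: Optional[random.Random] = None) -> List[str]:
    """Call `fetch` for each URL, pausing for a randomized delay after each one."""
    url_list = list(urls)
    rng = rng or random.Random()
    delays = jittered_delays(len(url_list), base_seconds, jitter, rng)
    results = []
    for url, delay in zip(url_list, delays):
        results.append(fetch(url))
        sleep(delay)  # injected so the pause can be replaced in tests
    return results
```

Passing `sleep` and `rng` as parameters is only a convenience for testing; in a real run the defaults apply and each request is followed by a pause of roughly one to three seconds.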

As your business scales up, it becomes necessary to take the data extraction process to the next level and scrape data at a large scale. However, scraping a large amount of data from websites isn't an easy task. You may encounter a few challenges that keep you from automatically getting a significant amount of data from various sources.

Roadblocks while undergoing web scraping at scale:

1. Dynamic website structure:

It is easy to scrape plain HTML web pages. However, many websites now rely heavily on JavaScript/Ajax techniques for dynamic content loading. Both require all sorts of complex libraries, which makes it cumbersome for web scrapers to obtain data from such websites.

2. Anti-scraping technologies:

Technologies such as CAPTCHAs and login walls act as safeguards to keep spam away. However, they also pose a great challenge for a basic web scraper to get past. Because such anti-scraping technologies apply complex algorithms, it takes a lot of effort to come up with a technical workaround. Some may even need a middleware service like 2Captcha to solve.

3. Slow loading speed:

The more web pages a scraper needs to go through, the longer it takes to complete. It is obvious that scraping at a large scale will take up a lot of resources on a local machine. A heavier workload on the local machine might lead to a breakdown.

4. Data warehousing:

Large-scale extraction generates a huge volume of data. This requires a strong data warehousing infrastructure to store the data securely. It takes a lot of money and time to maintain such a database.

Although these are some common challenges of scraping at a large scale, Octoparse has already helped many companies overcome such issues. Octoparse’s cloud extraction is engineered for large-scale extraction.

Cloud extraction to scrape websites at scale

Cloud extraction allows you to extract data from your target websites 24/7 and stream into your database, all automatically. The one obvious advantage? You don’t need to sit by your computer and wait for the task to get completed.

But...there are actually more important things you can achieve with cloud extraction. Let me break them down into details:

1. Speediness

In Octoparse, we call a scraping project a “task”. With cloud extraction, you can scrape as much as 6 to 20 times faster than with a local run.

This is how cloud extraction works. When a task is created and set to run in the cloud, Octoparse sends the task to multiple cloud servers, which then perform the scraping concurrently. For example, if you are trying to scrape product information for 10 different pillows on Amazon, instead of extracting the 10 pillows one by one, Octoparse initiates the task and sends it to 10 cloud servers, each of which extracts data for one of the ten pillows. In the end, you get the data for all 10 pillows in roughly 1/10th of the time it would take to extract it locally.

This is obviously an over-simplified version of the Octoparse algorithm, but you get the idea.
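The fan-out idea can be illustrated on a single machine. The sketch below is not Octoparse's implementation: it uses a thread pool as a stand-in for separate cloud servers, and `fake_scrape` is a hypothetical placeholder for the real per-product extraction.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def scrape_concurrently(items: List[str],
                        scrape_one: Callable[[str], Dict[str, str]],
                        max_workers: int = 10) -> List[Dict[str, str]]:
    """Run `scrape_one` for every item in parallel, keeping the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as `items`
        return list(pool.map(scrape_one, items))


def fake_scrape(product_id: str) -> Dict[str, str]:
    """Hypothetical stand-in for fetching and parsing one product page."""
    return {"id": product_id, "title": f"Pillow {product_id}"}


if __name__ == "__main__":
    pillows = [str(n) for n in range(10)]
    for row in scrape_concurrently(pillows, fake_scrape):
        print(row)
```

With ten workers and ten items, each worker handles one item, which mirrors the "one pillow per server" picture. The actual speed-up depends on how much time each request spends waiting on the network.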

2. Scrape more websites simultaneously

Cloud extraction also makes it possible to scrape up to 20 websites simultaneously. Following the same idea, each website is scraped on a single cloud server, which then sends the extracted data back to your account.

You can set up different tasks with various priorities to make sure the websites will be scraped in the order preferred.

3. Unlimited cloud storage

During a cloud extraction, Octoparse removes duplicated data and stores the clean data in the cloud, so you can access it at any time, from anywhere, and there’s no limit to the amount of data you can store. For an even more seamless scraping experience, integrate Octoparse with your own program or database via the API to manage your tasks and data.
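Duplicate removal is easy to picture with a small example. This is a generic sketch, not Octoparse's logic: it assumes each scraped record is a dictionary and that you choose which fields identify a record (the field names here are hypothetical).

```python
from typing import Dict, Iterable, List, Sequence


def deduplicate(rows: Iterable[Dict[str, str]],
                key_fields: Sequence[str]) -> List[Dict[str, str]]:
    """Keep the first row seen for each combination of `key_fields`."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(row.get(field) for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


if __name__ == "__main__":
    scraped = [
        {"url": "/p/1", "price": "10"},
        {"url": "/p/2", "price": "12"},
        {"url": "/p/1", "price": "10"},  # the same page scraped twice
    ]
    print(deduplicate(scraped, ["url"]))
```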

4. Schedule runs for regular data extraction

If you're gonna need regular data feeds from any websites, this is the feature for you. With Octoparse, you can easily set your tasks to run on schedule, daily, weekly, monthly or even at any specific time of each day. Once you finish scheduling, click 'Save and Start'. The task will run as scheduled.

5. Less blocking

Cloud extraction reduces the chance of being blacklisted or blocked. You can use IP proxies, switch user agents, clear cookies, adjust the scraping speed, etc.

Tracking web data at a large volume such as social media, news, and e-commerce websites will elevate your business performance with>

Ashley is a data enthusiast and passionate blogger with hands-on experience in web scraping. She focuses on capturing web data and analyzing it in a way that empowers companies and businesses with actionable insights. Read her blog to discover practical tips and applications on web data extraction.

Web scraping is the process of extracting data that is available on the web using a series of automated requests generated by a program.

It is known by a variety of terms, such as screen scraping, web harvesting, and web data extraction. Indexing or crawling by a search engine bot is similar to web scraping. A crawler goes through your information for the purpose of indexing or ranking your website against others, whereas during scraping the data is extracted to replicate it elsewhere or for further analysis.

A crawler also strictly follows the instructions that you list in your robots.txt file, whereas a scraper may totally disregard those instructions.
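For reference, this is roughly what "following robots.txt" looks like in code. The sketch uses Python's standard `urllib.robotparser`; the rules, the bot name, and the URLs are made-up examples.

```python
from urllib.robotparser import RobotFileParser

# Example rules: everything is allowed except paths under /private/
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt content permits `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(is_allowed(ROBOTS_TXT, "ExampleBot", "https://example.com/articles/1"))
    print(is_allowed(ROBOTS_TXT, "ExampleBot", "https://example.com/private/data"))
```

A well-behaved crawler runs a check like this before every request. Nothing forces a scraper to do the same, which is why robots.txt alone is not a defense.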

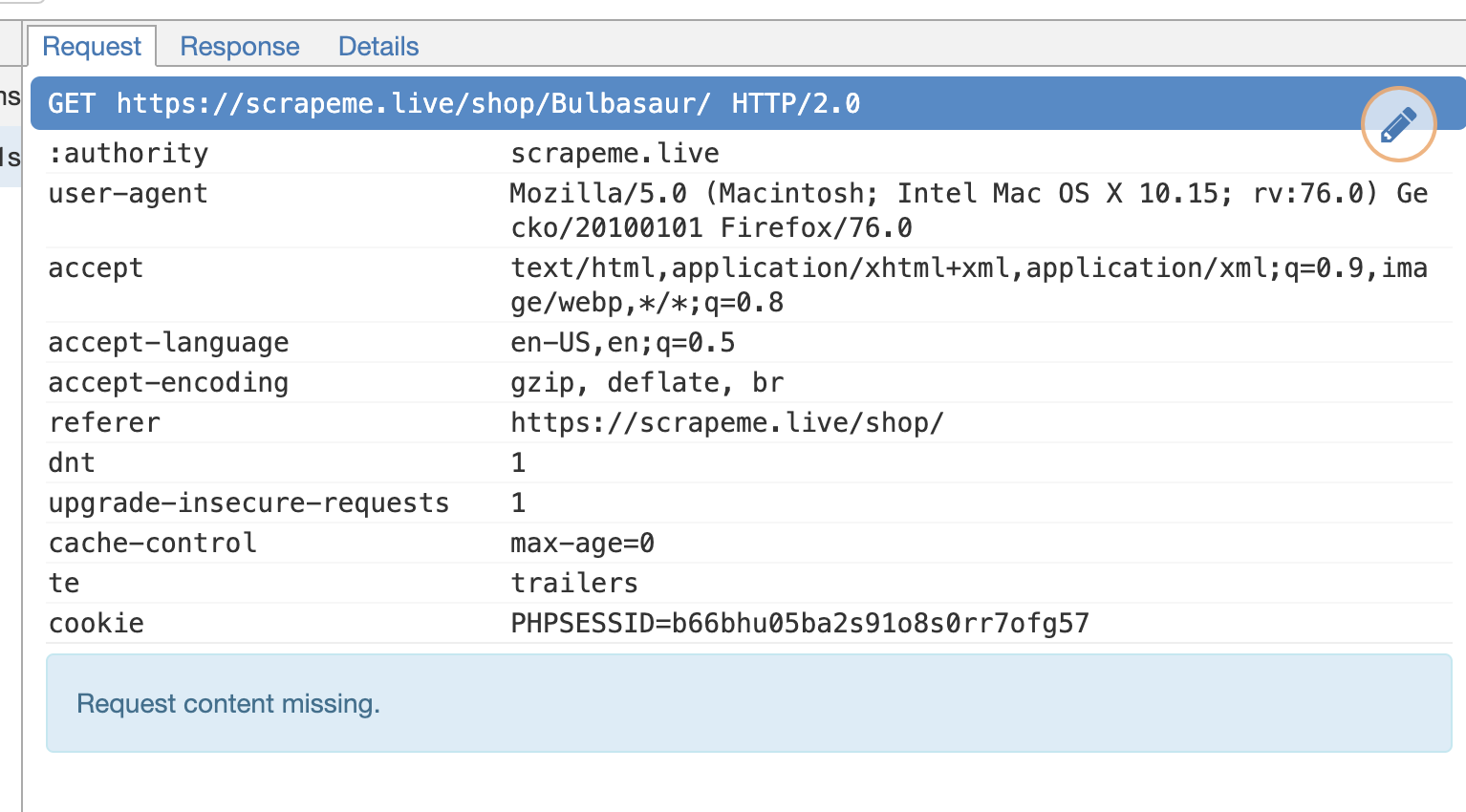

During the process of web scraping, an attacker is looking to extract data from your website - it can range from live scores, weather information, prices or even whole articles. The ideal way to extract this data is to send periodic HTTP requests to your server, which in turn sends the web page to the program.

The attacker then parses this HTML and extracts the required data. This process is then repeated for hundreds or thousands of different pages that contain the required data. An attacker might use a specially written program targeting your website or a general-purpose tool that helps scrape a series of pages.
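To show how little effort the parsing step takes, here is a minimal sketch using only Python's standard `html.parser`. The markup and the `price` class name are invented for illustration; a real scraper would target whatever structure your pages actually use.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class PriceExtractor(HTMLParser):
    """Collect the text inside every <span class="price"> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_price = False
        self.prices: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag == "span" and ("class", "price") in attrs:
            self._in_price = True

    def handle_data(self, data: str) -> None:
        if self._in_price:
            self.prices.append(data.strip())

    def handle_endtag(self, tag: str) -> None:
        if tag == "span":
            self._in_price = False


def extract_prices(html: str) -> List[str]:
    """Parse an HTML string and return the price texts in document order."""
    parser = PriceExtractor()
    parser.feed(html)
    parser.close()
    return parser.prices
```

Because the extractor depends on the exact tag and class name, even a small change to the markup breaks it, which is the weakness that some of the defenses discussed later exploit.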

Technically, this process may not be illegal as an attacker is just extracting information that is available to him through a browser, unless the webmaster specifically forbids it in the terms and conditions of the website. This is a gray area, where ethics and morality come into play.

As a webmaster, you should, therefore, be equipped to prevent attackers from getting your data easily. Uncontrolled scraping in the form of an overwhelming number of requests at a time may also lead to a denial of service (DoS) situation, where your server and all services hosted on it become unresponsive.

The top companies that are targeted by scrapers are digital publishers (blogs, news sites), e-commerce websites (for prices), directories, classifieds, airlines and travel (for information). Scraping is bad for you as it can lead to a loss of competitive advantage and therefore, a loss of revenue. In the worst case, scraping may lead to your content being duplicated elsewhere and lead to a loss of credibility for the original source. From a technological point of view, scraping may lead to excess pressure on your server, slowing it down and eventually inflating your bills too!

Since we have established that it is good to forbid web scrapers from accessing your website, let us discuss a few ways in which you can take a strong stand against potential attackers. Before we proceed, you must know that anything that is visible on the screen can be scraped and that there is no absolute protection. You can, however, make a web scraper's life harder.

Take a Legal Stand

The easiest way to avoid scraping is to take a legal stand, whereby you mention clearly in your terms of service that web scraping is not allowed. For instance, Medium’s terms of service contain the following line:

Crawling the Services is allowed if done in accordance with the provisions of our robots.txt file, but scraping the Services is prohibited.

You can even sue potential scrapers if you have forbidden scraping in your terms of service. For instance, LinkedIn sued a set of unnamed scrapers last year, saying that extracting user data through automated requests amounts to hacking.

Prevent denial of service (DoS) attacks

Even if you have put up a legal notice prohibiting scraping of your services, a potential attacker may still go ahead with it, causing a denial of service at your servers and disrupting your daily operations. You need to be able to prevent such situations.

You can identify potential IP addresses and block requests from reaching your service by filtering through your firewall. Although it’s a manual process, modern cloud service providers give you access to tools that block potential attacks. For instance, if you are hosting your services on Amazon Web Services, the AWS Shield would help protect your server from potential attacks.

Use Cross Site Request Forgery (CSRF) tokens

By using CSRF tokens in your application, you prevent automated tools from making arbitrary requests to guest URLs. A CSRF token may be present as a session variable or as a hidden form field. To get around a CSRF token, one needs to load and parse the markup and search for the right token before bundling it together with the request. This process requires either programming skills or access to professional tools.
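A minimal sketch of the server side of this check follows. It assumes the session is a plain dictionary and that the token is stored under a `csrf_token` key; both are illustrative choices, and a real web framework will usually provide its own CSRF middleware.

```python
import hmac
import secrets
from typing import Dict, Optional


def issue_csrf_token(session: Dict[str, str]) -> str:
    """Create a random token, remember it in the session, and return it
    so that it can be embedded in a hidden form field."""
    token = secrets.token_urlsafe(32)
    session["csrf_token"] = token
    return token


def is_valid_csrf(session: Dict[str, str], submitted: Optional[str]) -> bool:
    """Check the token sent with a request against the one stored in the session."""
    expected = session.get("csrf_token")
    if not expected or not submitted:
        return False
    # Constant-time comparison avoids leaking how many characters matched
    return hmac.compare_digest(expected.encode("utf-8"), submitted.encode("utf-8"))
```

A request that was not preceded by loading the form has no valid token and is rejected, which is what forces an automated tool to fetch and parse the page first.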

Using .htaccess to prevent scraping

.htaccess is a configuration file for your Apache web server, and it can be tweaked to prevent scrapers from accessing your data. The first step is to identify scrapers, which can be done through Google Webmasters or Feedburner. Once you have identified them, you can use many techniques to stop the process of scraping by changing the configuration file.

In general, .htaccess support is not enabled on Apache by default and needs to be turned on, after which Apache interprets the .htaccess files that you place in your directories.

.htaccess files can only be created for Apache, but we also provide equivalents for Nginx and IIS for our examples. A detailed guide on converting rewrite rules for Nginx can be found in the Nginx documentation.

Prevent hotlinking

When your content is scraped, inline links to images and other files are copied directly to the attacker’s site. When the same content is displayed on the attacker’s site, such a resource (image or another file) directly links to your website. This process of displaying a resource that is hosted on your server on a different website is called hotlinking.

When you prevent hotlinking, your server refuses to serve such an image when it is displayed on a different site. As a result, scraped content can no longer rely on resources hosted on your server.

In Nginx, hotlinking can be prevented by using a location directive in the appropriate configuration file (nginx.conf). In IIS, you need to install URL Rewrite and edit the configuration (web.config) file.
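Whichever server you use, the rule boils down to inspecting the `Referer` header of requests for images and other static files. The sketch below expresses that decision in Python rather than in server configuration; the host names are placeholders, and treating an empty referer as acceptable is an assumption you may want to change.

```python
from typing import Collection, Optional
from urllib.parse import urlparse

# Hosts that are allowed to embed your resources (placeholder values)
ALLOWED_HOSTS = frozenset({"example.com", "www.example.com"})


def is_hotlink(referer: Optional[str],
               allowed_hosts: Collection[str] = ALLOWED_HOSTS) -> bool:
    """Return True when a resource request comes from a page on a foreign host."""
    if not referer:
        # Direct visits and privacy tools often send no Referer header
        return False
    host = urlparse(referer).hostname
    return host not in allowed_hosts
```

A request flagged as a hotlink would typically be answered with a 403 status or a placeholder image instead of the real file.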

Blacklist or Whitelist specific IP addresses

If you have identified the IP addresses or patterns of IP addresses that are being used for scraping, you can simply block them through your .htaccess file. You may also selectively allow requests from specific IP addresses that you have whitelisted.

In Nginx, you can use the ngx_http_access_module to selectively allow or deny requests from an IP address. Similarly, in IIS, you can restrict the IP addresses accessing your services by adding a Role in the Server Manager.
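The same allow/deny logic can be sketched in application code with Python's standard `ipaddress` module. The address ranges below come from the blocks reserved for documentation and are purely illustrative; the rule that a whitelist entry overrides a blacklist entry is an assumption of this sketch.

```python
import ipaddress
from typing import Iterable, List, Sequence, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def build_networks(cidrs: Iterable[str]) -> List[Network]:
    """Parse strings such as '203.0.113.0/24' into network objects."""
    return [ipaddress.ip_network(cidr) for cidr in cidrs]


def is_blocked(client_ip: str,
               blocked: Sequence[Network],
               allowed: Sequence[Network] = ()) -> bool:
    """Return True if the client falls in a blocked range and is not whitelisted."""
    address = ipaddress.ip_address(client_ip)
    if any(address in network for network in allowed):
        return False  # whitelisted addresses always pass
    return any(address in network for network in blocked)
```

Blocking by range rather than by single address is what lets you cover "patterns of IP addresses" with one entry.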

Throttling requests

Alternatively, you may limit the number of requests from one IP address, although this may not help if an attacker has access to multiple IP addresses. A CAPTCHA may also be used when abnormal requests arrive from an IP address.

You may also want to block access from known cloud hosting and scraping service IP addresses to make sure an attacker is unable to use such a service to scrape your data.
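One common way to limit requests per IP address is a sliding-window counter. The following is a minimal in-memory sketch; the class name and the limits are illustrative, and a production setup would usually keep the counters in a shared store or rely on the web server's own rate-limiting features.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within any `window_seconds` span."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_ip: str, now: float) -> bool:
        """Record a request made at time `now` (in seconds) and say whether to serve it."""
        hits = self._hits[client_ip]
        # Forget requests that have fallen out of the window
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject, or show a CAPTCHA instead
        hits.append(now)
        return True
```

In a request handler you would call `limiter.allow(ip, time.monotonic())` and respond with an error or a challenge when it returns `False`.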

Create 'honeypots'

A “honeypot” is a link to fake content that is invisible to a normal user, but that is present in the HTML which would come up when a program is parsing the website. By redirecting a scraper to such honeypots, you can detect scrapers and make them waste resources by visiting pages that contain no data.

Do not forget to disallow such links in your robots.txt file to make sure a search engine crawler does not end up in such honeypots.
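The detection side of a honeypot can be sketched in a few lines. The trap path, the class name, and the idea of flagging by IP address are assumptions made for illustration; the helper at the end produces the matching robots.txt rules so that legitimate crawlers stay away from the trap.

```python
from typing import Iterable, Set


class HoneypotTracker:
    """Flag any client that requests a URL no human visitor could see."""

    def __init__(self, trap_paths: Iterable[str]) -> None:
        self.trap_paths: Set[str] = set(trap_paths)
        self.flagged: Set[str] = set()

    def record(self, client_ip: str, path: str) -> bool:
        """Register a request and return True if the client is (now) flagged."""
        if path in self.trap_paths:
            self.flagged.add(client_ip)
        return client_ip in self.flagged


def robots_rules(trap_paths: Iterable[str]) -> str:
    """Build robots.txt lines that keep well-behaved crawlers out of the traps."""
    lines = ["User-agent: *"]
    lines += [f"Disallow: {path}" for path in sorted(trap_paths)]
    return "\n".join(lines)
```

Once a client is flagged, you can block it, throttle it, or keep feeding it fake pages.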

Change DOM structure frequently

Most scrapers parse the HTML that is retrieved from the server. To make it difficult for scrapers to access the required data, you can frequently change the structure of the HTML. Doing so forces an attacker to evaluate the structure of your website again in order to extract the required data.

Provide APIs

As Medium’s terms of service say, you can selectively allow extracting data from your site by making certain rules. One way is to create subscription-based APIs to monitor and give access to your data. Through APIs, you would also be able to monitor and restrict usage to the service.

Report attacker to search engines and ISPs

If all else fails, you may report a web scraper to a search engine so that they delist the scraped content, or to the ISPs of the scrapers to make sure they block such requests.

Conclusion

The fight between a webmaster and a scraper is a continuous one, and each must stay vigilant to remain a step ahead of the other.

All the solutions provided in this article can be bypassed by someone with a lot of tenacity and resources (as anything that is visible can be scraped), but it's a good idea to remain careful and keep monitoring traffic to make sure that your services are being used in the way you intended.